Introduction: Shadow AI – The Quiet Danger Most Enterprises Don’t See Coming

AI is transforming the enterprise by automating workflows, speeding up decision-making, and inspiring innovation. However, a new problem is surfacing in 2025: Shadow AI.

Similar to Shadow IT in the early 2010s, Shadow AI refers to unauthorized AI tools or models used in an organization without the oversight of IT and compliance teams.

While the democratization of AI has allowed teams to move quicker, it has also introduced unseen risks:

- Unvetted models using sensitive data

- Compliance breaches with no audit trail

- Inconsistent outcomes and model drift

In this post, we will discuss what Shadow AI is, why it is proliferating, and, most importantly, how you can mitigate it through effective governance, policies, and technical controls.

What is Shadow AI?

Shadow AI is any AI application, model, or third-party AI tool used without formal approval, integration, or oversight by an organization’s central IT, security, or governance teams.

Common examples of Shadow AI:

- Employees using ChatGPT or other AI tools to process sensitive information

- Data scientists training models on cloud platforms without using approved infrastructure

- Departments procuring third-party AI SaaS tools without presenting them to IT

While people may have good intentions (speed, innovation, experimentation), the risks are very real—and are increasing.

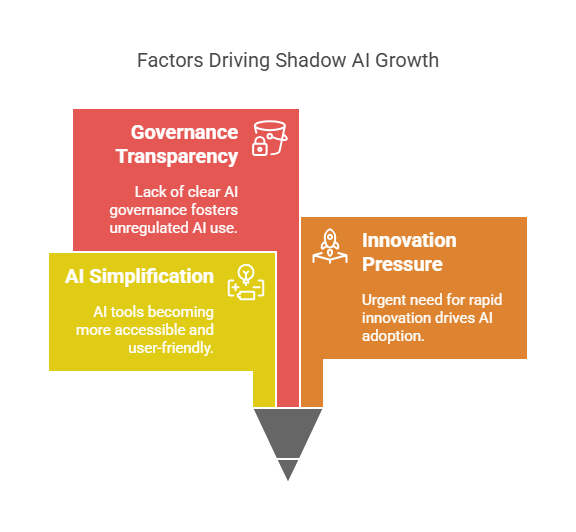

Reasons for the Rise of Shadow AI in 2025

Several things are causing Shadow AI to rise.

1. AI is getting simpler.

Low-code platforms, open-source models, and APIs let users build and deploy AI faster than ever, without help from central IT teams.

2. Pressure to innovate quickly.

Many teams want speed, automation, and all things AI to gain an advantage, and sometimes they bypass the slower review process to get it.

3. AI Governance lacks transparency.

Many organizations are still unclear about where their policies live and how the approval workflow runs, and some do not even have an informal registry of approved AI models.

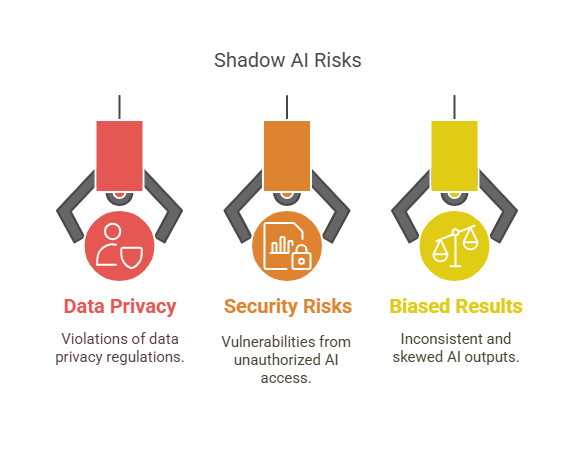

The Hidden Risks of Shadow AI in Enterprise Automation

A different set of vulnerabilities arises when you let AI run in the shadows:

1. Data Privacy and Compliance Violations

Unapproved AI tools can lead to violations of regulations such as GDPR, CCPA, or the EU AI Act in several ways:

- Data is stored on an unapproved cloud service

- Personal or regulated data is processed

- Model outputs come without explainability or audit logs

2. Security Risks

AI models that run outside of the organization’s footprint could also:

- Lack appropriate access control

- Be exposed to adversarial attacks

- Use or disclose sensitive business logic or proprietary data

3. Inconsistent or Biased Results

Shadow AI models are likely to have:

- Uncleaned, unvalidated training data

- No version control

- No fairness checks, explainability, or testing

This erodes trust in automation and increases the risk of biased decisions.

How to Find Shadow AI in Your Organization

Before you can stop Shadow AI, you have to find it.

Here are some ways to do that:

- Conduct an AI asset audit: Survey teams to record the AI models, tools, and platforms in use throughout your organization.

- Monitor network activity: Watch for connections to publicly available AI tools and unregistered (unapproved) services.

- Conduct departmental interviews: Find out which AI tools are used in everyday workflows, and under what circumstances.

- Study expense reports: Shadow AI often appears in team-based SaaS purchases.
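
The network-monitoring step can be sketched in a few lines of Python. This is a minimal illustration, not a production detector: the log format (`<timestamp> <user> <url>`), the `find_ai_traffic` helper, and the short host list are all assumptions made for the example, and a real deployment would read from your proxy or DNS logs and maintain a much longer list.

```python
from collections import Counter
from urllib.parse import urlparse

# Illustrative, incomplete list of public AI API hosts; maintain your own.
AI_HOSTS = {
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
}


def find_ai_traffic(log_lines):
    """Count requests per (user, host) for hosts listed in AI_HOSTS.

    Assumes a hypothetical log format: "<timestamp> <user> <url>".
    """
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip lines that do not match the assumed format
        _, user, url = parts
        host = urlparse(url).hostname
        if host in AI_HOSTS:
            hits[(user, host)] += 1
    return hits


# Made-up sample data for demonstration only.
sample_log = [
    "2025-03-01T09:00:00Z alice https://api.openai.com/v1/chat/completions",
    "2025-03-01T09:01:00Z bob https://intranet.example.com/wiki",
    "2025-03-01T09:02:00Z alice https://api.openai.com/v1/chat/completions",
    "2025-03-01T09:03:00Z carol https://api.anthropic.com/v1/messages",
]

for (user, host), count in sorted(find_ai_traffic(sample_log).items()):
    print(user, host, count)
```

A report like this is a starting point for a conversation with the team involved, not proof of a violation: some of the traffic may already be covered by an approved contract.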

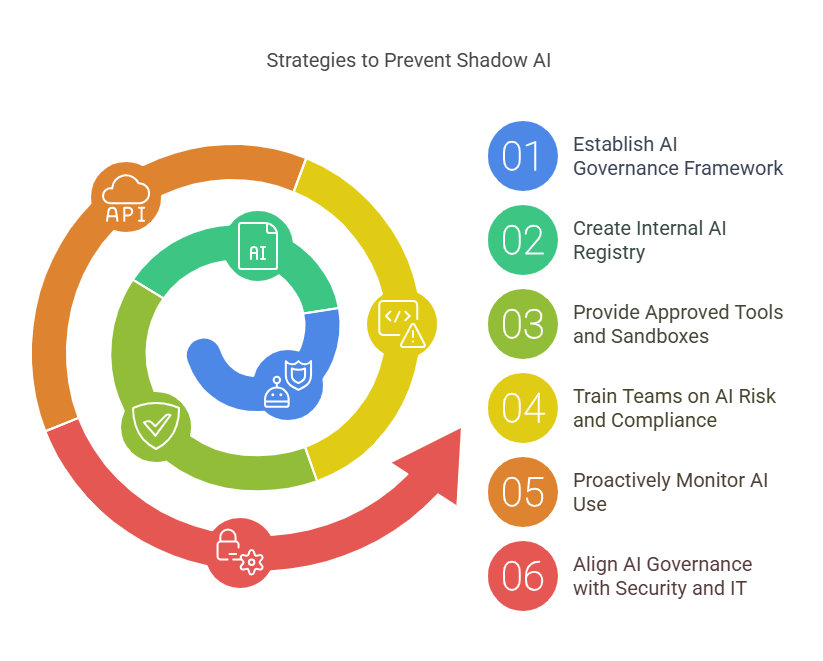

How to Prevent Shadow AI: 6 Key Strategies

1. Establish an AI Governance Framework

Create an AI governance strategy that clearly describes:

- Who can build or deploy AI

- The approvals and documentation required

- The ownership and maintenance responsibilities
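
One way to make such a framework enforceable is to express it as a machine-checkable policy. The sketch below assumes a hypothetical `DeploymentRequest` record and made-up role, approval, and document names; your own framework would define the real values.

```python
from dataclasses import dataclass, field

# Hypothetical policy values; real ones come from your governance framework.
ALLOWED_ROLES = {"ml_engineer", "data_scientist"}
REQUIRED_APPROVALS = {"security", "compliance"}
REQUIRED_DOCS = {"model_card", "data_protection_assessment"}


@dataclass
class DeploymentRequest:
    requester_role: str
    owner: str  # team accountable for ongoing maintenance
    approvals: set = field(default_factory=set)
    documents: set = field(default_factory=set)


def policy_violations(req):
    """Return human-readable reasons why the request fails the policy."""
    problems = []
    if req.requester_role not in ALLOWED_ROLES:
        problems.append(f"role '{req.requester_role}' may not deploy AI")
    missing_approvals = REQUIRED_APPROVALS - req.approvals
    if missing_approvals:
        problems.append("missing approvals: " + ", ".join(sorted(missing_approvals)))
    missing_docs = REQUIRED_DOCS - req.documents
    if missing_docs:
        problems.append("missing documents: " + ", ".join(sorted(missing_docs)))
    if not req.owner.strip():
        problems.append("no owner assigned")
    return problems


request = DeploymentRequest(
    requester_role="ml_engineer",
    owner="fraud-team",
    approvals={"security"},
    documents={"model_card", "data_protection_assessment"},
)
for problem in policy_violations(request):
    print(problem)
```

Returning a list of reasons, rather than a bare yes or no, tells the requesting team exactly what is missing and keeps the approval path short.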

2. Create an Internal AI Registry

Establish a central repository of all AI models, tools, APIs, and datasets utilized by your teams. This will become a source of truth for your AI assets and will assist with tracking:

- Model lineage

- Data provenance

- Performance and drift
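
As a rough illustration, a registry entry might carry fields like the ones below. The class names, field names, and the drift rule (an accuracy drop beyond a tolerance) are assumptions chosen for this sketch; production registries usually track richer drift metrics and live in a database rather than in memory.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RegistryEntry:
    name: str
    version: str
    owner: str
    parent_model: Optional[str]   # model lineage: what this model was derived from
    training_data_source: str     # data provenance
    baseline_accuracy: float      # measured when the model was approved
    current_accuracy: float       # latest monitored value

    def has_drifted(self, tolerance=0.05):
        """Flag the model when accuracy falls more than `tolerance` below baseline."""
        return (self.baseline_accuracy - self.current_accuracy) > tolerance


class AIRegistry:
    """In-memory registry keyed by (name, version)."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[(entry.name, entry.version)] = entry

    def is_registered(self, name, version):
        return (name, version) in self._entries

    def drifted(self):
        return [e for e in self._entries.values() if e.has_drifted()]


# Made-up entries for demonstration only.
registry = AIRegistry()
registry.register(RegistryEntry(
    "churn-predictor", "1.2", "growth-team",
    "churn-predictor:1.1", "warehouse.customers_2024", 0.91, 0.84,
))
registry.register(RegistryEntry(
    "ticket-router", "3.0", "support-team",
    None, "helpdesk.tickets_2024", 0.88, 0.87,
))

print([entry.name for entry in registry.drifted()])
```

Anything that shows up in audits or network logs but fails `is_registered` is, by definition, a Shadow AI candidate.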

3. Provide Approved Tools and Sandboxes

Enable teams to innovate safely. Give teams access to approved platforms, for example:

- Internal model development sandboxes

- Verified third-party AI tools

- Pre-trained models with compliance filters

4. Train Teams on AI Risk and Compliance

Not all Shadow AI is malicious. Often it is nothing more than a lack of awareness. Train your teams on:

- Data privacy

- Responsible AI

- Security protocols for deploying models

5. Proactively Monitor AI Use

Use tools that can:

- Detect when models are trained or used for inference outside the approved environment

- Detect unauthorized API access, such as calls to an unapproved API

- Analyze usage in cloud environments for AI activity
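
The checks above amount to comparing observed activity against an allowlist. The sketch below assumes usage events arrive as simple dictionaries with `kind`, `environment`, and `host` keys, and uses made-up environment and host names; real events would come from your cloud audit logs or API gateway.

```python
# Hypothetical allowlists; in practice these would be derived from your AI registry.
APPROVED_ENVIRONMENTS = {"ml-sandbox", "ml-prod"}
APPROVED_API_HOSTS = {"ai-gateway.internal.example.com"}


def classify_event(event):
    """Return a list of alert strings for one usage event (a dict).

    Assumed event shape: {"kind": ..., "environment": ..., "host": ...},
    where "environment" and "host" are optional.
    """
    alerts = []
    kind = event.get("kind")
    if kind in {"training", "inference"}:
        env = event.get("environment")
        if env not in APPROVED_ENVIRONMENTS:
            alerts.append(f"{kind} outside approved environment: {env}")
    elif kind == "api_call":
        host = event.get("host")
        if host not in APPROVED_API_HOSTS:
            alerts.append(f"call to unapproved API: {host}")
    return alerts


# Made-up events for demonstration only.
events = [
    {"kind": "training", "environment": "personal-cloud-account"},
    {"kind": "inference", "environment": "ml-prod"},
    {"kind": "api_call", "host": "api.example-ai-vendor.com"},
    {"kind": "api_call", "host": "ai-gateway.internal.example.com"},
]

for event in events:
    for alert in classify_event(event):
        print(alert)
```

An allowlist is stricter than a list of known AI services: it flags anything not explicitly approved, at the cost of more alerts to review early on.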

6. Align AI Governance with Security and IT

Shadow AI mitigation is not just a data science issue. It takes collaboration among:

- AI/ML teams

- IT & Security

- Compliance

- Legal

Ensure that policies and enforcement are aligned across these groups.

Real-World Example of a Global SaaS Company Addressing Shadow AI

A publicly traded global SaaS company learned that several product and marketing teams were using third-party AI content generators and analytics tools without informing IT.

Here is what the company did:

- Conducted a comprehensive audit of how AI was used.

- Created an internal approval process and registry.

- Created a marketplace of approved AI tools.

- Set up monitoring of user access.

Result: Increased transparency, reduced compliance risk, and faster and safer AI innovation across these teams.

Conclusion: Shadow AI is Preventable

As AI expands, so must governance.

The risks posed by Shadow AI—from data exposures to ethical catastrophes—are simply too significant to be ignored. With the right combination of good policy, adequate tools, proper training, and appropriate oversight, unauthorized AI can be prevented from jeopardizing your digital transformation.


FAQs

Q: What is Shadow AI?

Shadow AI refers to AI tools or models used within an organization without formal approval, tracking, or oversight.

Q: Why is Shadow AI a problem?

It can lead to security vulnerabilities, compliance violations, biased outputs, and inconsistent decision-making.

Q: How can companies detect Shadow AI?

Through asset audits, network monitoring, team interviews, and reviewing expenses for unauthorized AI usage.

Q: What’s the best way to prevent Shadow AI?

Establish governance policies, provide approved tools, monitor usage, and educate teams on responsible AI.

Q: Is Shadow AI common in 2025?

Yes, it’s growing rapidly due to the rise of accessible AI platforms, low-code tools, and pressure to automate.