Introduction: Why AI Security Is Non-Negotiable in 2025

AI automation is transforming industries, and as the saying goes, “with great power comes great responsibility.” As businesses scale rapidly with intelligent systems at their core, concerns about AI data privacy, AI compliance, and the security of automated AI processes have skyrocketed. Without strong guardrails, you may expose sensitive data, violate compliance requirements, or, worse, lose your customers’ trust.

This post is your roadmap for building secure, compliant, and trusted AI automation systems.

The Problem: The Expanding Security Gap Surrounding AI

AI super agents and automation technologies now consume massive amounts of customer and operational data.

Here’s the problem:

“AI systems are only as secure as the pipelines and data flows that fuel them.”

However, many organizations:

- Do not assess AI risks before deployment.

- Do not think through the ethical and regulatory implications of AI.

- Do not put governance in place for AI automation.

Common AI automation threats include:

- Data leaks caused by poor access control.

- Biased AI models that create compliance risks.

- Deliberate or unintended actions by autonomous systems.

By addressing these risks proactively, organizations can avoid:

- Regulatory fines (GDPR, HIPAA).

- Customer loss due to breaches or unethical AI use.

- Stalled innovation due to lack of trust.

The Agitation: The Cost of Ignoring AI Security

What is the worst that can happen if you do not bake security into your AI automation?

Here is a glimpse of the real world:

- In 2024, a fintech startup faced a $3.2M penalty after its chatbot leaked clients’ financial information.

- A healthcare company failed a HIPAA audit because decisions made by its AI could not be traced.

- An e-commerce company’s AI recommendation algorithm produced discriminatory pricing, sparking public outrage.

The Solution: A Security-First AI Automation Framework

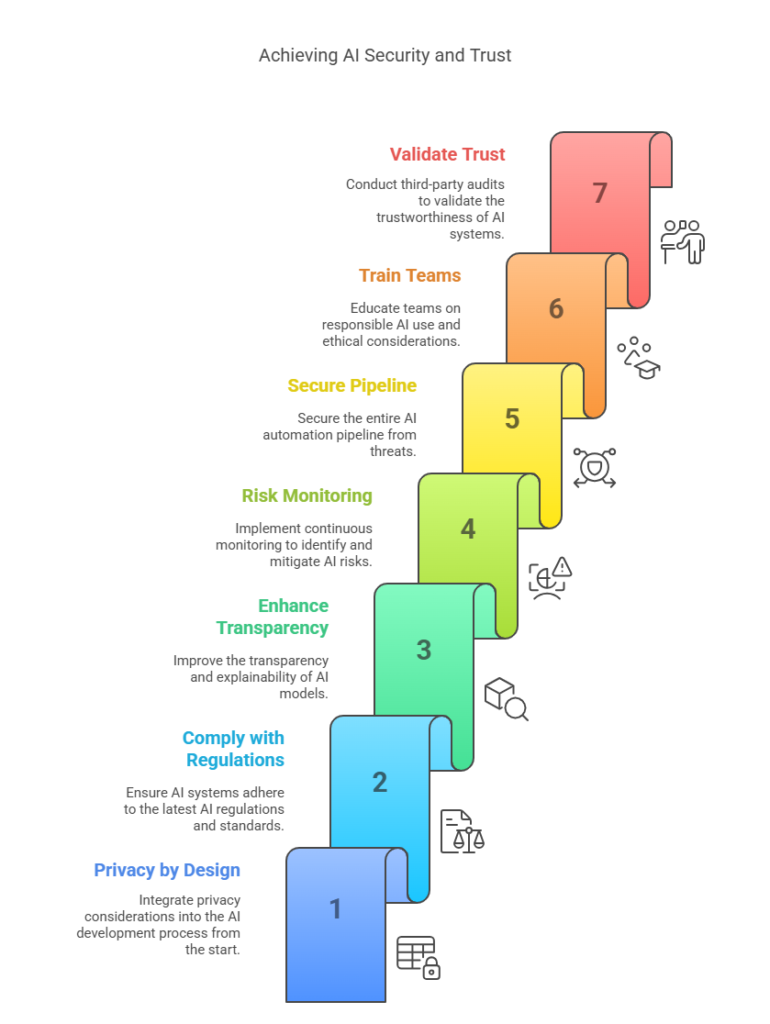

1. Develop with Privacy by Design

Don’t bolt privacy on afterward; build it into your workflows from the start.

Important Practices:

- Limit data exposure through anonymization and encryption

- Enforce role-based access for all AI inputs and outputs

- Minimize the data retained for model training
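A minimal sketch of the exposure and retention practices, assuming a Python preprocessing step that runs before any record reaches a model or prompt. The field names, the allowlist, and `PSEUDONYM_KEY` are hypothetical. Note that keyed hashing (HMAC-SHA256) is pseudonymization rather than true anonymization: GDPR still treats pseudonymized data as personal data, so encryption and access controls remain necessary.

```python
import hashlib
import hmac
import re

# Hypothetical secret; in practice load it from a secrets manager, never hard-code it.
PSEUDONYM_KEY = b"replace-with-a-secret-from-your-vault"

# Hypothetical allowlist: only the fields the AI step actually needs.
ALLOWED_FIELDS = {"customer_id", "message", "plan"}
ID_FIELDS = {"customer_id"}

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def pseudonymize(value: str) -> str:
    """Replace an identifier with a keyed, non-reversible token (HMAC-SHA256)."""
    digest = hmac.new(PSEUDONYM_KEY, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def minimize_record(record: dict) -> dict:
    """Drop unneeded fields, pseudonymize IDs, and redact emails in free text."""
    cleaned = {}
    for field, value in record.items():
        if field not in ALLOWED_FIELDS:
            continue  # data minimization: the model never sees this field
        if field in ID_FIELDS:
            cleaned[field] = pseudonymize(str(value))
        elif isinstance(value, str):
            cleaned[field] = EMAIL_RE.sub("[EMAIL]", value)
        else:
            cleaned[field] = value
    return cleaned


raw = {
    "customer_id": "C-1042",
    "ssn": "123-45-6789",
    "message": "Please email me at jane@example.com about my invoice.",
    "plan": "pro",
}
print(minimize_record(raw))
```

Because the same key always yields the same token, records for one customer can still be joined downstream without exposing the real identifier.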

2. Comply with New AI Regulations

New AI laws are arriving fast.

Key Compliance Areas:

- GDPR (EU), HIPAA (US), and the EU AI Act (in force since August 2024, with obligations phasing in from 2025)

- Model explainability requirements

- Auditability of automated decisions
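For the audit requirement, a useful habit is writing one structured record per automated decision. The sketch below assumes a Python service; the `DecisionRecord` fields are a hypothetical schema, not one mandated by GDPR, HIPAA, or the AI Act. It stores a SHA-256 digest of the inputs rather than the raw data, so the log does not become another copy of sensitive information; for low-entropy inputs that could be guessed, a keyed hash (HMAC) is the safer choice.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    """One audit entry per automated decision (hypothetical schema)."""

    model_name: str
    model_version: str
    input_digest: str  # hash of the inputs; the log itself holds no raw data
    decision: str
    reasons: list[str]  # human-readable factors behind the decision
    human_reviewer: Optional[str] = None
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def digest_inputs(inputs: dict) -> str:
    """SHA-256 of the canonicalized inputs (sort_keys makes key order irrelevant)."""
    canonical = json.dumps(inputs, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def to_json_line(record: DecisionRecord) -> str:
    """Serialize one record as a JSON Lines entry for an append-only store."""
    return json.dumps(asdict(record), sort_keys=True)


record = DecisionRecord(
    model_name="loan-screening",
    model_version="2025.03.1",
    input_digest=digest_inputs({"income": 52000, "region": "EU"}),
    decision="refer_to_human",
    reasons=["income below auto-approval threshold"],
)
print(to_json_line(record))
```

Recording the model version alongside each decision is what lets an auditor tie a past outcome back to the exact model that produced it.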

3. Improve AI Model Transparency & Explainability

AI is often a black box, but it doesn’t have to be.

Suggestions for Improvement:

- Use interpretable models where possible

- Visualize feature attributions with tools such as LIME or SHAP

- Document model assumptions and limitations
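To make the first two suggestions concrete, here is a minimal sketch of an interpretable logistic-regression scorer whose per-decision explanation is each feature’s contribution to the log-odds, measured against a baseline of training-set means. The weights, feature names, and baseline are hypothetical. This is a hand-rolled stand-in, not the LIME or SHAP libraries; for a linear model with independent features, these contributions coincide with SHAP values in log-odds space, and they always sum to the gap between the applicant’s log-odds and the baseline’s.

```python
import math

# Hypothetical weights for an interpretable (logistic-regression) screening model.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -1.0
# Hypothetical training-set feature means, used as the explanation baseline.
BASELINE = {"income_k": 50.0, "debt_ratio": 0.3, "late_payments": 1.0}


def log_odds(x: dict) -> float:
    return BIAS + sum(WEIGHTS[f] * x[f] for f in WEIGHTS)


def predict_proba(x: dict) -> float:
    return 1 / (1 + math.exp(-log_odds(x)))


def explain(x: dict) -> list[tuple[str, float]]:
    """Each feature's contribution to the log-odds vs. the baseline, largest first."""
    contribs = {f: WEIGHTS[f] * (x[f] - BASELINE[f]) for f in WEIGHTS}
    return sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True)


applicant = {"income_k": 40.0, "debt_ratio": 0.5, "late_payments": 3}
print(f"approval probability: {predict_proba(applicant):.2f}")
for feature, contribution in explain(applicant):
    print(f"{feature:>14}: {contribution:+.2f} log-odds")
```

The ranked output doubles as documentation: it states in plain terms which inputs pushed a given decision and by how much.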

4. Institute Continuous AI Risk Monitoring

AI systems change, and so should your monitoring.

Things to Monitor:

- Input data drift

- Model performance over time

- Anomalies or unintended actions
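Input data drift can be tracked with the Population Stability Index (PSI), which compares how a live sample spreads across bins defined on a reference sample: the sum over bins of (actual share − expected share) × ln(actual share / expected share). The sketch below assumes numeric features, equal-width bins, and a 1e-6 floor for empty bins; those choices and the synthetic data are illustrative. A common rule of thumb reads PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as significant drift worth investigating.

```python
import math
import random


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index of `actual` against the reference `expected`."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the reference range fall in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins to avoid log(0) and division by zero.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


rng = random.Random(0)
reference = [rng.gauss(50, 10) for _ in range(5000)]     # e.g. training-time values
live_same = [rng.gauss(50, 10) for _ in range(5000)]     # same distribution
live_shifted = [rng.gauss(60, 10) for _ in range(5000)]  # mean shifted by one std dev

for name, sample in [("same", live_same), ("shifted", live_shifted)]:
    score = psi(reference, sample)
    status = "drift" if score > 0.25 else "ok"
    print(f"{name}: PSI={score:.3f} -> {status}")
```

Running a check like this on a schedule, per feature, turns “monitor input drift” into an alert with a concrete threshold.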

5. Secure the Automation Pipeline

It’s not just the AI model – it’s the entire pipeline.

Secure Every Layer:

- API authentication & access tokens

- Logging and audit trails for every automated action

- Endpoint security for agent actions
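A minimal sketch of two of these layers, assuming a Python service that sits between your agents and the systems they act on: every requested agent action is checked against a role-based allowlist, and every attempt, allowed or not, is appended to a hash-chained audit log so that editing an earlier entry breaks verification. The roles, actions, and `AuditLog` class are hypothetical. API authentication itself (validating access tokens) would normally come from your identity provider and is not shown.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical role-to-action allowlist for automation agents.
PERMISSIONS = {
    "support-agent": {"read_ticket", "draft_reply"},
    "billing-agent": {"read_invoice", "issue_refund"},
}
GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log; each entry embeds the previous entry's hash."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, event: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {**event, "ts": datetime.now(timezone.utc).isoformat(), "prev": prev}
        self.entries.append({**body, "hash": _entry_hash(body)})

    def verify(self) -> bool:
        """Recompute the chain; an edited or reordered entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or entry["hash"] != _entry_hash(body):
                return False
            prev = entry["hash"]
        return True


def authorize(log: AuditLog, role: str, action: str) -> bool:
    """Allow only allowlisted actions, and log every attempt either way."""
    allowed = action in PERMISSIONS.get(role, set())
    log.append({"role": role, "action": action, "allowed": allowed})
    return allowed


log = AuditLog()
authorize(log, "support-agent", "draft_reply")   # permitted
authorize(log, "support-agent", "issue_refund")  # denied, but still recorded
print("chain intact:", log.verify())
log.entries[0]["allowed"] = False                # simulate tampering
print("chain intact:", log.verify())
```

Hash chaining makes tampering detectable, not impossible: someone with write access could rebuild the whole chain, so production systems typically also anchor the latest hash in separate write-once storage or sign entries with a key the writer does not hold.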

6. Train Teams on Responsible AI Use

It’s not just the tool; it’s the people using it.

Make it Real:

- AI security workshops

- Role-specific governance policies

- Incident response scenarios

7. Validate Trust with Third-Party Audits

Independent auditing = credibility.

How to Proceed:

- Partner with AI governance consultants

- Leverage audit logging & version control systems

- Conduct bias audits and fairness assessments
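One simple bias-audit check is the disparate impact ratio: each group’s selection rate divided by the highest group’s rate. The sketch below flags groups whose ratio falls under 0.8, the “four-fifths rule” from US EEOC employment-selection guidelines, which is a screening heuristic rather than a universal legal test. Group labels and outcome data are hypothetical.

```python
from collections import defaultdict


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes (e.g. approvals) for each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        positives[group] += int(selected)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact(
    outcomes: list[tuple[str, bool]], threshold: float = 0.8
) -> dict[str, dict]:
    """Compare each group's selection rate with the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no positive outcomes in any group; ratio is undefined")
    return {
        g: {"rate": r, "ratio": r / best, "flag": r / best < threshold}
        for g, r in rates.items()
    }


# Hypothetical audit sample: 60/100 approvals for group A, 40/100 for group B.
outcomes = [("A", i < 60) for i in range(100)] + [("B", i < 40) for i in range(100)]
for group, stats in disparate_impact(outcomes).items():
    label = "FLAG" if stats["flag"] else "ok"
    print(group, f"rate={stats['rate']:.2f}", f"ratio={stats['ratio']:.2f}", label)
```

A flag is a prompt for investigation, not a verdict; a third-party auditor would also look at sample sizes, other fairness metrics, and the business justification for any gap.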

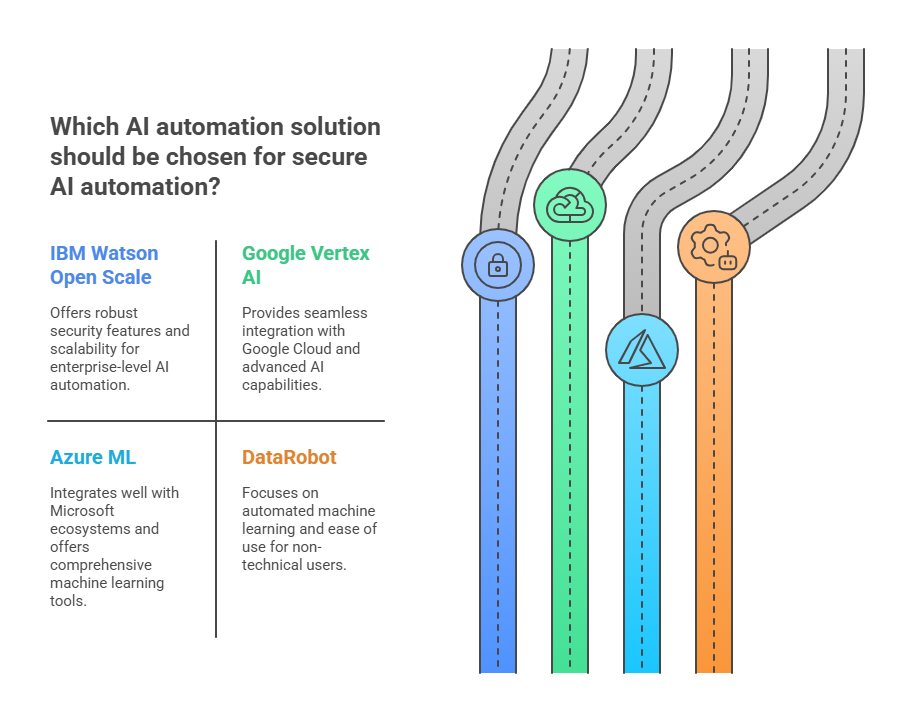

Tools That Support Secure AI Automation

- IBM Watson OpenScale – bias detection and fairness monitoring

- Google Vertex AI – model governance features

- Azure Machine Learning – role-based access control and explainability dashboards

- DataRobot – monitoring and audit features

Use Case: Securing AI in Action

Use Case – Healthcare AI solution

Problem – An AI solution processing patient intake forms at a clinic risked violating HIPAA.

Solution – Implemented data anonymization, established audit trails, and used explainability tools.

Result – Passed a third-party audit, lowered the risk score by 40%, and improved patient trust.

Common Mistakes to Avoid

- Ignoring model fairness and bias assessments

- Failing to record AI activity, leaving no traceability

- Using black-box models for regulated decisions

- Assuming the vendor handles all security

Conclusion: Trustworthy AI is Secure AI

AI automation should scale your business—not your risk.

If you incorporate AI security, compliance, and governance into each layer of your intelligent systems, you don’t just meet the standard—you exceed it.

📩 Book your free AI Security Consultation with Codepaper and protect your systems before it’s too late.

FAQs

Q: Why is AI automation security important for businesses in 2025?

AI systems now process vast amounts of sensitive customer and operational data. Without proper safeguards, businesses risk data breaches, non-compliance with AI regulations, and loss of customer trust. Prioritizing AI security ensures long-term scalability, reputation protection, and innovation readiness.

Q: What are the top risks in AI automation systems?

The biggest threats include data leakage, biased AI models, unexplainable decisions, and automation errors that could trigger non-compliant or unethical outcomes. Implementing AI risk assessment tools and secure automation pipelines helps mitigate these issues.

Q: How can companies ensure compliance with AI regulations like the EU AI Act or HIPAA?

Start by embedding AI compliance best practices such as explainable AI models, regular audits, and clear documentation of decision logic. Partnering with legal and AI governance experts can help you navigate complex rules like GDPR, HIPAA, and the EU AI Act, whose obligations phase in from 2025 onward.

Q: What is “privacy-first AI automation”?

Privacy-first AI automation means building systems where data privacy is prioritized at every layer—from design and data collection to model training and decision output. It includes data minimization, encryption, and access control mechanisms.

Q: Can automation pipelines be a security risk?

Absolutely. If your automation pipeline lacks encryption, role-based access, or logging, it can be exploited. Secure your pipeline by implementing API authentication, audit trails, and endpoint security checks.